Why do all Machine Learning models follow the same steps?

Understand how all Machine Learning Models follow the same procedure over and over again

Introduction

It's tough to find things that always work the same way in programming.

The steps of a Machine Learning (ML) model can be an exception.

Each time we want to compute a model (a mathematical equation) and make predictions with it, we always follow the same steps:

- model.fit() → to compute the numbers of the mathematical equation.
- model.predict() → to calculate predictions through the mathematical equation.
- model.score() → to measure how good the model's predictions are.
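Here is a minimal sketch that makes the three steps concrete. MajorityClassifier is a hypothetical toy class written for this example; it is not part of scikit-learn, but it exposes the same three methods. Its "equation" is deliberately trivial:

- fit() learns the most frequent class.
- predict() returns that class for every row.
- score() returns the share of correct predictions.

The ages and labels come from the five rows that df.head() displays in the next section.

```python
import numpy as np


class MajorityClassifier:
    """Hypothetical toy model that mimics the fit/predict/score interface."""

    def fit(self, X, y):
        # "Compute the numbers" of the model: here, just the most frequent label
        values, counts = np.unique(np.asarray(y), return_counts=True)
        self.majority_ = values[np.argmax(counts)]
        return self  # scikit-learn estimators also return self from fit()

    def predict(self, X):
        # Apply the learned "equation": the same label for every row of X
        return np.full(len(X), self.majority_)

    def score(self, X, y):
        # Success ratio: share of predictions that match the true labels
        return float(np.mean(self.predict(X) == np.asarray(y)))


X = np.array([[66], [72], [48], [59], [44]])  # ages from df.head()
y = np.array([0, 1, 1, 0, 1])                  # internet_usage from df.head()

model = MajorityClassifier()
model.fit(X, y)
print(model.predict(X))   # [1 1 1 1 1]
print(model.score(X, y))  # 0.6
```

A real model replaces the single stored label with many learned numbers, but the calling code stays exactly the same.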

I am going to show you this with 3 different ML models:

- DecisionTreeClassifier()
- SVC()
- KNeighborsClassifier()

Load the Data

But first, let's load a dataset from the CIS by executing the lines of code below.

The goal of this dataset is:

- To predict the internet_usage of people (rows)
- Based on their socio-demographic characteristics (columns)

import pandas as pd

df = pd.read_csv('https://raw.githubusercontent.com/jsulopz/data/main/internet_usage_spain.csv')

df.head()

|   | internet_usage | sex | age | education |
|---|---|---|---|---|
| 0 | 0 | Female | 66 | Elementary |
| 1 | 1 | Male | 72 | Elementary |
| 2 | 1 | Male | 48 | University |
| 3 | 0 | Male | 59 | PhD |
| 4 | 1 | Female | 44 | PhD |

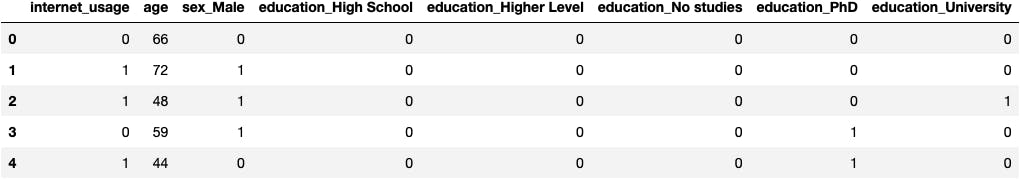

Data Preprocessing

Before computing the models, we need to transform the categorical variables into dummy variables, because these scikit-learn models only work with numbers:

df = pd.get_dummies(df, drop_first=True)

df.head()
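To see what drop_first=True does, here is a sketch on a small DataFrame rebuilt from the five rows that df.head() shows above. Each text category becomes its own 0/1 column. The first category of each variable in sorted order (Female and Elementary in this sample) is dropped, because it is already implied when the other dummies of that variable are all 0. The dtype=int argument is an extra choice made here so the dummies display as 0/1 instead of True/False.

```python
import pandas as pd

# The five rows displayed by df.head() above
sample = pd.DataFrame({
    'internet_usage': [0, 1, 1, 0, 1],
    'sex': ['Female', 'Male', 'Male', 'Male', 'Female'],
    'age': [66, 72, 48, 59, 44],
    'education': ['Elementary', 'Elementary', 'University', 'PhD', 'PhD'],
})

# Text columns are replaced by 0/1 dummy columns; numeric columns are kept as they are
dummies = pd.get_dummies(sample, drop_first=True, dtype=int)
print(dummies)
```

The result keeps internet_usage and age untouched and adds sex_Male, education_PhD and education_University. A person with Elementary education is the one whose two education dummies are both 0.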

Feature Selection

Now we separate the variables according to their role within the model: the target (what we want to predict) and the explanatory variables (what we use to predict it):

target = df.internet_usage

explanatory = df.drop(columns='internet_usage')
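scikit-learn expects two inputs in different shapes:

- The explanatory variables as a two-dimensional table, with one row per person and one column per variable.
- The target as a one-dimensional column, with one label per row.

Here is a sketch with a hypothetical, already-dummified DataFrame built from the df.head() rows. Education is left out for brevity.

```python
import pandas as pd

# Hypothetical dummified data in the same layout as df (sex_Male: 1 = Male)
df_small = pd.DataFrame({
    'internet_usage': [0, 1, 1, 0, 1],
    'age': [66, 72, 48, 59, 44],
    'sex_Male': [0, 1, 1, 1, 0],
})

target = df_small.internet_usage                       # y: one label per person
explanatory = df_small.drop(columns='internet_usage')  # X: every other column

print(target.shape)       # (5,)   -> one dimension
print(explanatory.shape)  # (5, 2) -> two dimensions: rows x columns
```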

ML Models

Decision Tree Classifier

from sklearn.tree import DecisionTreeClassifier

model = DecisionTreeClassifier()

model.fit(X=explanatory, y=target)

pred_dt = model.predict(X=explanatory)

accuracy_dt = model.score(X=explanatory, y=target)
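fit() is where the numbers are computed, and scikit-learn stores them on the model in attributes whose names end with an underscore. The sketch below uses the five df.head() rows, with sex turned into a sex_Male dummy by hand and education left out for brevity. It shows two things: fit() returns the model itself, and the learned values stay attached to it afterwards.

It also hints at a caveat. When it is scored on the same rows it learned from, an unrestricted decision tree can reach perfect accuracy simply by memorizing them.

```python
import pandas as pd
from sklearn.tree import DecisionTreeClassifier

# The five df.head() rows, with sex already turned into a dummy column
explanatory = pd.DataFrame({'age': [66, 72, 48, 59, 44],
                            'sex_Male': [0, 1, 1, 1, 0]})
target = pd.Series([0, 1, 1, 0, 1], name='internet_usage')

model = DecisionTreeClassifier(random_state=0)
fitted = model.fit(X=explanatory, y=target)  # fit() returns the same object

print(fitted is model)         # True
print(model.classes_)          # [0 1]: the labels the model can predict
print(model.tree_.node_count)  # size of the learned tree
print(model.score(X=explanatory, y=target))  # 1.0 on its own training rows
```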

Support Vector Machines

from sklearn.svm import SVC

model = SVC()

model.fit(X=explanatory, y=target)

pred_sv = model.predict(X=explanatory)

accuracy_sv = model.score(X=explanatory, y=target)
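A side note on SVC(): support vector machines are sensitive to the scale of the inputs, and here age (tens of years) sits next to 0/1 dummy columns. One common option is to wrap the model in a scikit-learn Pipeline with a StandardScaler. This is a sketch of that option, not what the original analysis did. The pipeline still exposes the same fit(), predict() and score() methods, so the rest of the workflow does not change. The data below is synthetic, with a hypothetical age-based rule as the target, only so that the block runs on its own.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for `explanatory`: one age-like column and one 0/1 dummy
rng = np.random.default_rng(0)
age = rng.integers(16, 90, size=200)
dummy = rng.integers(0, 2, size=200)
explanatory = np.column_stack([age, dummy])
target = (age < 50).astype(int)  # hypothetical rule, for illustration only

# The scaler standardizes each column before the SVC sees it
model = make_pipeline(StandardScaler(), SVC())

model.fit(X=explanatory, y=target)
pred_sv = model.predict(X=explanatory)
accuracy_sv = model.score(X=explanatory, y=target)
print(accuracy_sv)
```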

K Nearest Neighbour

from sklearn.neighbors import KNeighborsClassifier

model = KNeighborsClassifier()

model.fit(X=explanatory, y=target)

pred_kn = model.predict(X=explanatory)

accuracy_kn = model.score(X=explanatory, y=target)
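KNeighborsClassifier shows how differently the same three steps can be filled in. Its fit() mostly stores the training rows, possibly in a search structure. The real work happens in predict(), which takes a majority vote among the k closest rows (by default k = 5, using Euclidean distance).

The sketch below reproduces this by hand with NumPy on synthetic data and compares the result with scikit-learn. knn_predict is a hypothetical helper written for this example.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

rng = np.random.default_rng(1)
X_train = rng.normal(size=(50, 2))  # synthetic training rows
y_train = (X_train[:, 0] + X_train[:, 1] > 0).astype(int)
X_new = rng.normal(size=(10, 2))    # synthetic rows to classify

k = 5
model = KNeighborsClassifier(n_neighbors=k)  # Euclidean distance, uniform vote
model.fit(X=X_train, y=y_train)
pred_sklearn = model.predict(X=X_new)


def knn_predict(X_train, y_train, X_new, k):
    """Hypothetical hand-written k-NN for 0/1 labels: majority vote of the k closest rows."""
    preds = []
    for row in X_new:
        distances = np.sqrt(((X_train - row) ** 2).sum(axis=1))
        nearest = np.argsort(distances)[:k]
        votes = y_train[nearest]
        preds.append(int(votes.sum() > k / 2))  # an odd k avoids tied votes
    return np.array(preds)


pred_manual = knn_predict(X_train, y_train, X_new, k)
print((pred_manual == pred_sklearn).all())  # True
```

The hand-written version only handles 0/1 labels with an odd k. That is enough for a yes/no target like internet_usage.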

The models are different, and so are their predictions, but they all follow the same steps that we described at the beginning:

- model.fit() → to compute the mathematical formula of the model
- model.predict() → to calculate predictions through the mathematical formula
- model.score() → to get the success ratio (accuracy) of the model
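Because the steps are identical, the three model blocks can be collapsed into a single loop. Here is a sketch that uses synthetic data from scikit-learn's make_classification so it runs on its own. With the article's data, you would pass explanatory and target from the Feature Selection step instead.

```python
from sklearn.datasets import make_classification
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for `explanatory` and `target`
explanatory, target = make_classification(n_samples=300, n_features=5, random_state=0)

models = {
    'DecisionTreeClassifier()': DecisionTreeClassifier(),
    'SVC()': SVC(),
    'KNeighborsClassifier()': KNeighborsClassifier(),
}

predictions, accuracies = {}, {}
for name, model in models.items():
    model.fit(X=explanatory, y=target)                       # 1. compute the equation
    predictions[name] = model.predict(X=explanatory)         # 2. make predictions
    accuracies[name] = model.score(X=explanatory, y=target)  # 3. measure accuracy

print(accuracies)
```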

Comparing Predictions

In the following table, you can see how the different models make different predictions, which sometimes don't match reality (misclassification).

For example, the SVC model misclassifies row 214: it predicts that this person uses the internet (pred_svm = 1), but in reality they don't (internet_usage = 0).

df_pred = pd.DataFrame({'internet_usage': df.internet_usage,

'pred_dt': pred_dt,

'pred_svm': pred_sv,

'pred_kn': pred_kn})

df_pred.sample(10, random_state=7)

|   | internet_usage | pred_dt | pred_svm | pred_kn |
|---|---|---|---|---|
| 214 | 0 | 0 | 1 | 0 |
| 2142 | 1 | 1 | 1 | 1 |
| 1680 | 1 | 0 | 0 | 0 |
| 1522 | 1 | 1 | 1 | 1 |
| 325 | 1 | 1 | 1 | 1 |
| 2283 | 1 | 1 | 1 | 1 |
| 1263 | 0 | 0 | 0 | 0 |
| 993 | 0 | 0 | 0 | 0 |
| 26 | 1 | 1 | 1 | 1 |
| 2190 | 0 | 0 | 0 | 0 |
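Instead of spotting misclassifications by eye, you can let pandas filter them. Here is a sketch that uses three rows copied from the sampled table (indices 214, 2142 and 1680) and keeps only the pred_dt and pred_svm columns.

```python
import pandas as pd

# Three rows copied from the sampled comparison table
df_pred = pd.DataFrame({'internet_usage': [0, 1, 1],
                        'pred_dt': [0, 1, 0],
                        'pred_svm': [1, 1, 0]},
                       index=[214, 2142, 1680])

# Rows where the SVC prediction disagrees with reality
wrong_svm = df_pred[df_pred.pred_svm != df_pred.internet_usage]
print(wrong_svm.index.tolist())  # [214, 1680]

# Number of misclassified rows per model (compare each column with the real values)
errors = df_pred.drop(columns='internet_usage').ne(df_pred.internet_usage, axis=0).sum()
print(errors)  # pred_dt: 1, pred_svm: 2
```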

Choose Best Model

Then, we could choose the model with the highest accuracy, that is, the one that predicts reality correctly most often:
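For classifiers, model.score() returns the accuracy: the share of rows where the prediction equals the real value. The sketch below checks this three ways on synthetic data, with make_classification standing in for the survey data.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for `explanatory` and `target`
explanatory, target = make_classification(n_samples=200, n_features=4, random_state=0)

model = DecisionTreeClassifier(max_depth=2, random_state=0)
model.fit(X=explanatory, y=target)
pred = model.predict(X=explanatory)

by_score = model.score(X=explanatory, y=target)  # the method used in the article
by_metric = accuracy_score(target, pred)         # scikit-learn's accuracy metric
by_hand = np.mean(pred == target)                # successes / total rows

print(by_score, by_metric, by_hand)  # the same number three times
```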

df_accuracy = pd.DataFrame({'accuracy': [accuracy_dt, accuracy_sv, accuracy_kn]},

index = ['DecisionTreeClassifier()', 'SVC()', 'KNeighborsClassifier()'])

df_accuracy

|   | accuracy |
|---|---|
| DecisionTreeClassifier() | 0.859878 |
| SVC() | 0.783707 |
| KNeighborsClassifier() | 0.827291 |
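One caveat before answering: all three accuracies above are computed on the same rows the models were trained on. That tends to flatter flexible models such as a fully grown decision tree. A common alternative is to hold out part of the rows with train_test_split and score on those. It is sketched here on synthetic data rather than the CIS dataset. The three steps stay the same; only the data passed to each one changes.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in with some label noise (flip_y), since real survey data is noisy too
explanatory, target = make_classification(n_samples=400, n_features=6,
                                          flip_y=0.1, random_state=0)

# Keep 30% of the rows aside; the models never see them during fit()
X_train, X_test, y_train, y_test = train_test_split(
    explanatory, target, test_size=0.3, random_state=0)

results = {}
for model in [DecisionTreeClassifier(random_state=0), SVC(), KNeighborsClassifier()]:
    name = type(model).__name__
    model.fit(X=X_train, y=y_train)
    results[name] = (model.score(X=X_train, y=y_train),  # accuracy on seen rows
                     model.score(X=X_test, y=y_test))    # accuracy on unseen rows
    print(f'{name}: train={results[name][0]:.3f}, test={results[name][1]:.3f}')
```

Comparing the test column rather than the train column gives a fairer idea of how each model would do on people it has not seen.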

Which is the best model here?

- Let me know in the comments below ↓